It was February 1945 in the mangrove swamps of Ramree Island, off the coast of Burma, and a dark page of World War II was about to be written. When Japanese soldiers withdrew from a losing fight with Allied troops, they trudged into a labyrinth of mud and water that would spell their doom. The muddy waterways were infested with saltwater crocodiles. The Battle of Ramree Island was a minor battle of the Burma Campaign, and it is remembered not for its military consequences but for a macabre anecdote: hundreds of Japanese soldiers were reportedly eaten by crocodiles in a single night. Popularized by the vivid prose of a Canadian naturalist, the legend has fascinated readers for decades, even though its authenticity is as murky as the wetlands themselves. This is the story of Ramree, where war, wildlife and myth meet.

The Burma Campaign and Ramree’s Strategic Significance

To fully comprehend the terror of Ramree, we must first turn back the clock to 1945, when the Pacific theater of World War II was a seething cauldron of desperation. The Burma Campaign, overshadowed by the wars in Europe and the Pacific Islands, was a brutal struggle to break the Japanese grip on Burma (Myanmar). Ramree Island, a 520-square-mile island in the Bay of Bengal just off Burma’s Arakan (now Rakhine) coast, was strategically valuable for its location. Its low-lying terrain was suitable for an airfield to support Allied action against the mainland, which made it a target that General William Slim’s Fourteenth Army wished to seize. The British XV Indian Corps carried out Operation Matador, an amphibious landing to take Ramree, in January 1945. The estimated 2,700-man Japanese garrison under General Toshinari Noda had occupied the island since 1942. Encircled and cut off from supplies, the Japanese fought bravely, using the island’s dense jungle and coastal defenses to slow the Allied advance.
Intensive naval bombardment and ground assaults shattered their lines by early February. Some 900 to 1,000 Japanese soldiers, aware of their impending defeat, retreated eastward, hoping to fight their way through the island’s mangrove swamps and join a larger force on the mainland. Little did they know that they were moving into one of nature’s deadliest traps.

Into the Mangroves: A Desperate Retreat

The Ramree swamps are a tangle of twisted roots where every step sinks into gooey, knee-deep mud. The air swarms with mosquitoes that carry malaria and dengue. Scorpions and venomous snakes shelter in the swamps, and the brackish water harbors a far more dangerous enemy. Saltwater crocodiles (Crocodylus porosus) hold dominion over Ramree’s waters. They are the largest living reptiles on Earth, growing up to 23 feet long (approx. 7 meters), with bone-crushing jaws. For the Japanese soldiers, who were exhausted, starving, and weighed down with guns and scarce ammunition, the swamps were a journey into doom. On the evening of February 19, 1945, as the retreating Japanese pushed through the darkness, the Allies closed in on them. British motor launches patrolled the coastal waterways, cutting off escape channels, while ground troops encircled the swamps and shot stragglers. The soldiers, some of them injured, others stricken with dysentery, had to run a gauntlet. Hunger clawed at them; the mud grabbed at their boots. And then, as one version has it, the crocodiles struck.

The Crocodile Massacre: Bruce Wright’s Account

The most vivid account of the night was given by Bruce Stanley Wright, a Canadian naturalist and Royal Canadian Navy officer attached to the Burma Campaign. In his book Wildlife Sketches Near and Far, published in 1962, Wright described the night in the following passage: “That night was the most horrible that any member of the M. L. [motor launch] crews ever experienced. 
The scattered rifle shots in the pitch-black swamp punctured by the screams of wounded men crushed in the jaws of huge reptiles, and the blurred worrying sound of spinning crocodiles made a cacophony of hell that has rarely been duplicated on earth. At dawn the vultures arrived to clean up what the crocodiles had left… Of about one thousand Japanese soldiers that entered the swamps of Ramree, only about twenty were found alive.”

Wright’s vivid account, later echoed by conservationist Roger Caras and once featured in the Guinness Book of World Records, helped cement Ramree’s place in popular lore, even though his version was based on secondhand reports rather than direct observation. His description suggests a massacre on a horrific scale: scores of men, stunned and trapped, being torn limb from limb by crocodiles in a feeding frenzy. British soldiers on the motor launches, stationed at the edges of the swamps, heard cries and gunfire throughout the night. However, Wright was not among the soldiers in the swamps. His evidence was hearsay from motor launch crews, who heard the chaos rather than saw it. The question arises: were the Japanese really massacred by crocodiles, or has the story been inflated by time and wartime retelling?

Unraveling the Truth: Crocodiles or Pandemonium?

Modern scholarship is skeptical about the scale of the crocodile attacks. In 2000, herpetologist Steven Platt interviewed several older Ramree Island residents who had lived through the war. Their accounts, together with military records, point to a far less sensational truth. Platt estimated that only 10 to 15 soldiers likely died from crocodile attacks, with the animals mainly scavenging bodies or picking off isolated stragglers. Most of the Japanese dead, hundreds of the 900 to 1,000 who had entered the swamps, fell victim to more mundane but no less fatal causes. These included Allied bullets, drowning, starvation, malaria, dysentery, and snake and scorpion bites. 
Saltwater crocodiles are undeniably powerful predators, but some researchers and historians believe that the Ramree account has been exaggerated. Furthermore, the swamps themselves were a death sentence. The Japanese, cut off by Allied soldiers, had no access to food or fresh water. Some died in tidal creeks or were trapped in the mud, unable to move.

Mike the Headless Chicken—The Rooster That Defied Death

On September 10, 1945, in the dry town of Fruita, Colorado, a farmer by the name of Lloyd Olsen was preparing a chicken for his family’s evening meal. What followed was no ordinary barnyard mishap. When Olsen’s axe chopped off the head of a young Wyandotte rooster named Mike, the bird didn’t just flop and die. Oh no… it got up, shook off the wound and lived on! For 18 months, Mike the Headless Chicken roamed, preened and pecked his way into legend. The bird became a national sensation and an eerie testament to the resilience of life. Let us now unfold the story of Mike, the rooster who defied death, together with the people who turned him into a celebrity and the curious science that made it all possible.

A Fateful Chop in Fruita

Fruita in 1945 was a quiet farming community, its orchards and fields far removed from the chaos of World War II’s final days. Lloyd Olsen had been told by his wife, Clara, to kill a chicken for her mother’s visit. One of the birds available was a plump, healthy, five-and-a-half-month-old rooster. This is where Mike makes his debut. As destiny would have it, Lloyd’s axe descended and chopped off most of Mike’s head, but not all of it. The blade missed the jugular vein and left one ear, the brainstem and a section of the skull intact. The blood clotted and, far from becoming dinner, Mike stumbled to his feet, unsteady but very much alive. The couple, half amused and half confused, decided to spare him. They placed him in an apple crate for the night to see if he’d survive. The next morning, Mike was not only alive but attempting to crow, though it came out as a gurgle, since he no longer had a beak. The Olsens sensed that something extraordinary was before them, and so they began feeding him with an eyedropper, dripping water and ground corn directly into his open esophagus. 
Word of the headless wonder rooster eventually spread, and Fruita’s peaceful streets were soon to become a stage. From Barnyard to Big Time Sensation The Olsens’ neighbors were initially suspicious, but soon made visits to the miracle rooster. Within days, local newspapers featured articles dubbing him “Mike the Headless Chicken.” Word filtered through a Salt Lake City promoter named Hope Wade, who thought of making dollars through the unusual bird. Wade enlisted the Olsen couple to take to the road together with Mike as the promoter lured them with promises of fame and wealth. An 18-month journey had started which transformed a farmyard accident into a national phenomenon. Mike’s tour kicked off in 1945, crisscrossing the USA from county fairs to city sideshows. For a quarter, spectators would stare at the headless rooster who strutted around in makeshift enclosures, poked at imaginary grain and even gained weight; ballooning up to near eight pounds. The Olsens also brought a jarred chicken head—purportedly Mike’s, but likely a stand-in—to spice up the act. Posters hyped the bird as “The Headless Wonder Chicken” or “Mike the Miracle Rooster,” and crowds were captivated. Life magazine ran a spread in October 1945, complete with photos of Mike in mid-strut. The article estimated the Olsens earned $4,500 a month during the peak of Mike’s popularity. However, Mike’s fame had its critics. Some ridiculed Mike as a sham and claimed that the Olsens had staged a headless puppet. Others cried animal abuse but Lloyd insisted Mike didn’t show a hint of pain, clucking (or gurgling) happily and preening. To set minds at ease, the Olsens toured the University of Utah in 1945, and scientists examined Mike. Their diagnosis was what appeared impossible: Mike survived, was healthy, and functioned, thanks to a biological fluke that kept his brainstem—the control center for basic reflexes—intact. The scientists were stunned, and Mike grew greater in fame. 

The Science of Survival

How could a chicken live without a head? The explanation lies in the peculiar anatomy of gallinaceous birds, otherwise known as landfowl. In chickens and related birds, the brainstem sits at the back of the skull and takes charge of automatic functions like breathing, heart rate and simple movement. Because Lloyd’s axe missed Mike’s jugular and brainstem, it left just enough of the nervous system intact to keep him alive. The clotted wound prevented him from bleeding to death, and the Olsens’ eyedropper feedings sustained him. Mike could walk, balance and even attempt a crow, although his “voice” was a gurgle as air flowed through his esophagus. His reflexes were intact and, with careful treatment, he could go on living. The phenomenon was not entirely unknown, since headless chickens sometimes thrash about by reflex after slaughter, but Mike’s prolonged survival was extraordinary. That he could gain weight suggested that his body was absorbing nutrients properly, a testament to the care taken by the Olsens. Mike’s life, though, was not without problems. Constant feeding and cleaning were required to prevent infection or choking. The Olsens also carried a syringe to suction mucus out of his esophagus. Mike’s strength amazed scientists but raised questions about consciousness. Did he hurt? Was he “conscious”? Without a cerebrum, the center of perception in the brain, Mike likely operated on autopilot, a living automaton driven by reflex. This did not diminish his popularity; if anything, it made him seem more of a miracle.

Mike’s Cultural Moment

Mike’s popularity flourished in the post-war euphoria of 1945 to 1947, when the USA wanted distraction. The war was won, and stories of tenacity, human or otherwise, struck a chord. Mike was a symbol of determination, a rooster who would not quit. 
He appeared on stage with other sideshow acts—fire-eaters, sword swallowers—but Mike was the star and a living paradox that grabbed tremendous attention. His performances took him to Los Angeles, New York and Chicago, where people gawked at his gait. Children screamed; adults debated whether he

Origins of the Cold War & Its Shadows on Modern Geopolitics

The Cold War was a tactical battle between two superpowers with bold ambitions of the globe’s future. Born out of World War II, this decades-long tension between the United States and the Soviet Union was not your average war—no direct battle between the two powerhouses took place. Rather, it was a war of ideologies that was fought through proxy wars, spy games and the very real possibility of nuclear showdown. Though the Cold War officially ended with the collapse of the Soviet Union in 1991, its heritage continues to haunt current world politics. Most recently its echoes reverberated in the ongoing Russo-Ukraine war. Birth of the Cold War The conflict’s genesis was created in the final years of World War II, in the Yalta and Potsdam Conferences (1945) where visions for post-war Europe differed, and this led to tension between the USA and the Soviet Union. It was born out of ideological differences from the time of the Bolshevik Revolution of 1917, as the USA promoted democracy and free enterprise whilst the Soviet Union sought to expand its influence through socialist regimes in Eastern Europe. The USA and its Western allies wanted to extend democratic government and economic partnership while also building a world based on open markets with collective security. Meanwhile, the Soviet Union (under Joseph Stalin) desired a buffer zone in Eastern Europe and thus established socialist-oriented governments in order to protect themselves against future possible invasions. This move was fueled thanks to a fear compounded by the sheer devastation which the Soviet Union had suffered during World War II. This ideological divide led to the descent of the Iron Curtain over Europe that segregated the East from West, and set the stage for an international power struggle. The Marshall Plan, or the American attempt to rebuild post-war Europe, was both a humanitarian effort and a strategic measure to stem the tide of communist expansion. 
Meanwhile, the Soviet Union solidified its control over Eastern Europe, creating a bloc of pro-Moscow satellite nations. The division of Germany into East and West, and later the construction of the Berlin Wall in 1961, became the most visible reminders of the Cold War’s divide.

The Arms Race and Nuclear Brinkmanship

The arms race was the most defining feature of the Cold War, during which the USA and the Soviet Union built vast nuclear arsenals that could annihilate the entire globe. The doctrine of Mutually Assured Destruction (MAD) held the superpowers in check: because a nuclear attack by either side would guarantee the destruction of both, neither could risk open conflict. Had either side lost restraint, the result would have been catastrophe on a global scale. This fine balance of power was most severely tested in the 1962 Cuban Missile Crisis, when the world teetered on the brink of nuclear war. The Cold War years were also marked by tremendous economic and technological transformation. Massive spending on defense and military technology gave rise to the military-industrial complex, which shaped American economic policy for decades. Cold War competition also drove technological innovation, especially during the Space Race. The Soviet launch of Sputnik in 1957 marked the start of the race, and each superpower went on to register milestones, such as the first man in space for the Soviet Union (Yuri Gagarin, 1961) and the USA’s memorable Apollo 11 mission to the Moon in 1969. Investment in space and defense technology not only built military capability but also generated innovations with wide civilian uses, including satellite technology and the early computer networking that grew into the internet.

Proxy Wars: The Cold War Gets Hot

Though the USA and the Soviet Union never met face-to-face in battle, they supported opposite sides in numerous proxy wars throughout the world. 
Such wars often served as arenas for the contest between communism and capitalism. The Korean War (1950 to 1953) was the first significant proxy war, and it ended in a stalemate that left Korea divided into North and South. The Vietnam War, which saw major US involvement from the early 1960s to 1973, culminated in the fall of Saigon in 1975, which united all of Vietnam under communist rule backed by the Soviet Union and China. In the Middle East, Africa and Latin America, the Cold War played out in coups, revolutions and insurgencies that more often than not led to the abandonment of democratic ideals in pursuit of broader strategic interests. These wars left lasting scars, and numerous countries grapple with the legacies of Cold War intervention to this day.

The Fall of the Soviet Union and the End of the Cold War

The 1980s were a turning point, as Soviet leader Mikhail Gorbachev introduced reforms like Glasnost (openness) and Perestroika (restructuring). Gorbachev’s efforts aimed to revive the Soviet economy and ease tension with the West, but they exposed deep-rooted internal problems in the Soviet system. This eventually set off a domino effect that culminated in the collapse of the Soviet Union. As internal changes unfolded, external Cold War tensions receded. The fall of the Berlin Wall in 1989, a longstanding symbol of division, was a turning point as East and West Germany began the reunification process. In 1991, the Soviet Union dissolved under a combination of socio-economic instability, political reforms, nationalist movements and external pressures, bringing the Cold War to an end. The USA emerged as the world’s leading superpower, and many people hoped for a new era of peace and cooperation. However, the legacy of the Cold War was far from over.

Lasting Influence on Modern Geopolitics

The Cold War’s influence on modern geopolitics is deep and widespread. 
The position of the USA as the world’s leading superpower is a direct result of having emerged victorious from the Cold War. Alliances and institutions formed during the Cold War, like NATO, continue to shape international politics even today. NATO was originally established to counter the threat of Soviet expansion, and is now a primary political instrument for making

How the US Dollar Dominated Global Economy & Faces New Foes

Imagine a monetary system so mighty it fuels oil deals in the Middle East and coffee trades in East Africa. This financial powerhouse would be none other than the US dollar, the greenback that’s ruled the global economy for over a century. Born during the scrappy days of a fledgling America, the US dollar rose through wars, clever deals as well as sheer economic heft to become the world’s go-to currency. Yet in 2025, with BRICS nations pushing back in rebellion and economic tremors like a US credit downgrade shaking trust, the US dollar faces a new stormy horizon. Let’s trace the dollar’s epic climb from colonial chaos to global money monarch and explore why its crown could slip if mishandled. Birth of a New Currency The dollar’s story starts in the 1700s, when America was a patchwork of currencies ranging from Spanish pesos and British shillings to even wampum beads swapped in markets. The 1792 Coinage Act eventually birthed the dollar, pegged to silver and modeled on Spanish coins. The US dollar progressed further when one of America’s Founding Fathers and the first US secretary of the treasury Alexander Hamilton steered a national bank to stabilize it. Early days of finance were rough: Revolutionary War “Continental dollars” crashed so hard they became a joke—“not worth a Continental” was the negative idiom of the day. However, by the 19th century, the dollar gained traction as the US economy grew, but the British pound still ruled global trade. The Civil War brought greenbacks—paper money not backed by metal—sparking debates over trust that lingered into the 1900s. Pretty wild, huh? Just imagine a young nation’s currency finding its legs and growing in strength, but it wasn’t yet a global player. The US Dollar’s Road to Power From chaos comes opportunity, for the 20th century turned the tide. World War I made the US a financial powerhouse, lending gold to war-torn Europe and boosting the dollar’s cred. 
By World War II, with Europe in shambles, the 1944 Bretton Woods Agreement crowned the dollar as king: 44 nations pegged their currencies to it, tied to gold at $35 an ounce. The US held nearly 70% of global gold reserves, making the dollar the backbone of trade and savings. Then, when US President Nixon cut the gold link in 1971—so-called the “Nixon Shock”—the dollar could’ve tanked. Instead, a 1974 deal with Saudi Arabia made oil trades dollar-only, birthing the petrodollar system. Every oil-hungry nation had to hoard greenbacks which further cemented the US dollar’s reign. By 1980, the US dollar powered 80% of global transactions whilst being backed by America’s massive economy; nearly half global GDP in the 1960s. Fast forward to 2025, the dollar still dominates, holding 58% of global foreign exchange reserves and driving 96% of trade in the Americas… but trouble’s brewing. The Rise of New Global Foes BRICS—made up of Brazil, Russia, India, China, South Africa, plus new joiners like Saudi Arabia and Iran—are gunning for change. Fed up with US sanctions (such as those crippling Russia post-2022 Ukraine invasion) these nations are pushing for de-dollarization. China’s yuan hit 3% of global trade in 2024, up from 1% a decade ago, with Russia and India trading oil in rupees to sidestep dollar controls. The BRICS New Development Bank now offers loans in local currencies, and whispers of a BRICS currency float, though experts call it a pipe dream (at least for the time being as of August 2025). Furthermore, digital currencies like Bitcoin or China’s digital yuan, add pressure to the dollar; offering ways to bypass dollar-based systems in this changing age of the global economy. Politicians and economists alike rage about American sanctions driving BRICS to rebel, with analysts warning of a “dollar crash” if trust fades. Recent 2025 events tied to war and social disorder pile on the heat too. 
US-based credit rating agency Moody’s downgraded the US credit rating in May 2025, citing ballooning deficits, while new US tariffs, some as high as 20%, sparked trade spats with China and the EU, hinting at a less open global market. Gold’s share of global reserve holdings climbed to 23%, fueled by price surges, as some nations hedged against dollar risks. Yet the dollar’s still got some serious muscle: the US economy (still 24% of global GDP) dwarfs rivals, and its financial markets remain unmatched for depth and trust.

Forged in Chaos, Seasoned with Power

No currency, whether yuan, euro or crypto, has matched the US dollar’s liquidity or stability over the years. Though the BRICS nations’ combined push is real, their economies are still too tangled with the dollar to break free fast. After all, the American greenback’s a seasoned survivor, forged in chaos and holding a firm grip on the global economy. Will the US dollar keep its crown? Only time will tell, but one thing’s for sure: the USD is one hell of a fighter.

Century-Old Swiss Lung Unlocks Spanish Flu Virus’s Secrets

In a dusty archive at the University of Zürich, the preserved lung of a Swiss teenager who died in the 1918 Spanish Flu has given up the genetic secrets of one of history’s deadliest diseases. Swiss researchers, led by paleogeneticist Verena Schünemann at the University of Basel, managed to sequence the full genome of the 1918 flu virus using a clever new RNA recovery technique. The research has expanded our understanding of how viruses evolve and how we could prepare for future pandemics, connecting a century-old tragedy to the challenges we face today.

A Flashback into the “Spanish Flu” Virus Horror

Though the 1918-1920 influenza pandemic has gone down in history as the “Spanish Flu”, the name is rather inaccurate, since the virus may not even have started in Spain. Nonetheless, the pandemic was a world-spanning catastrophe. The virus took the lives of 20 to 100 million people, more than World War I, which was being fought at the same time. Unlike today’s flu, which often hits the elderly hardest, the Spanish Flu killed healthy young adults in a matter of days. The pandemic’s origins are still a bit of a mystery. Some historians theorize that it started in the USA, with the first recorded case in Kansas. Others speculate that the virus originated in Asia and was carried to the USA or to France by Vietnamese or Chinese laborers. Wherever it began, the result was a tragedy that devastated communities on an epic scale. The Spanish Flu’s first wave struck Switzerland in July 1918, and the pandemic went on to kill thousands of people in the country. Among the victims was an 18-year-old from Zürich whose lung tissue would, a century later, enable a scientific breakthrough. 
Tucked away in a formalin-filled jar at the University of Zürich’s Institute of Evolutionary Medicine, the teenager’s lung sample held a snapshot of the virus at the pandemic’s start. Schünemann’s team, a group of researchers from Basel and Zürich, painstakingly extracted the virus’s genetic material. This marks the first time anyone has sequenced a 1918 flu virus genome from a Swiss sample, and it shows how the virus adapted itself to wreak havoc in Europe. By comparing it to samples from Germany and North America, the researchers pieced together how this bug became so efficient at infecting humans. Here’s the creepiest part: the infection process back then is similar to what we’ve seen in modern pandemics.

What Made the 1918 Spanish Flu Virus So Deadly?

The 1918 virus was a master of destruction thanks to a few genetic tricks up its sleeve. The Swiss team spotted three notable mutations in the Zürich sample that turned it from a run-of-the-mill flu into a human-adapted nightmare. Two of these changes helped the virus slip past the body’s immune defenses, specifically by dodging interferons, the proteins that act as the body’s first responders against viruses. Such changes help a virus leap from animals to humans more easily, a trait also seen in later threats like H5N1 bird flu. The third mutation souped up the virus’s hemagglutinin (HA) protein, which works like a skeleton key to unlock human cells. This tweak made the virus stickier and more infectious in the respiratory tract. Here’s the kicker: these mutations were already in play by July 1918, during the pandemic’s first wave, and they stuck around through its deadlier second and third waves. This means the virus was already adapted to humans early on, a finding consistent with earlier studies of North American samples (Taubenberger et al., 2005). By cross-checking the Swiss genome with sequences from victims in Berlin and Alaska, the team confirmed that these mutations were indeed widespread. 
It’s no wonder the 1918 flu spread like wildfire, overwhelming hospitals and morgues across continents.

Ancient RNA’s Secrets

“Ancient RNA is only preserved over long periods under very specific conditions. That’s why we developed a new method to improve our ability to recover ancient RNA fragments from such specimens.” (Christian Urban, University of Zürich, first author of the study)

Pulling genetic material from a 100-year-old sample is no cakewalk. Influenza viruses use RNA, which is notoriously fragile compared to DNA and falls apart quickly. Formalin preservation is great for keeping tissue intact, but the downside is that it often breaks RNA into tiny, unreadable bits. The Swiss team, however, came up with a nifty new method to salvage and verify these RNA scraps, which let them rebuild the virus’s full genome with impressive accuracy. Past efforts, such as sequencing the 1918 virus from frozen Alaskan bodies (Reid et al., 2000), needed near-perfect samples. The Swiss team’s technique opens the door to studying RNA viruses in the far more common formalin-fixed specimens that sit in medical archives all over the world. Collaboration with the Berlin Museum of Medical History at Charité gave the team access to more samples, which makes their findings even richer.

Prepping for Future Pandemics

“Medical collections are an invaluable archive for reconstructing ancient RNA virus genomes.” (Frank Rühli, head of the University of Zürich’s Institute of Evolutionary Medicine and co-author of the study)

The 1918 flu exposed how unprepared the world was for a viral onslaught. Back then there were no vaccines or antivirals, just hope and makeshift hospitals. Today, with viruses like SARS-CoV-2 and H5N1 looming, understanding how past pathogens evolved is more important than ever. 
This Swiss study gives us a playbook for tracking viral mutations, showing how a few genetic tweaks can turn a mild bug into a global menace. By combining genetic data with historical records, the Swiss team is building tools to predict future pandemics. Their blend of paleogenetics, epidemiology and history offers a clearer framework for forecasting how viruses (especially RNA viruses) might evolve or mutate

Matawan Man-Eater Mystery: Revisiting the 1916 Shark Attacks

July 1916 remains an unforgettable moment in maritime history, when the coastline of New Jersey became the site of underwater horror. Over a span of twelve days, a series of shark attacks—two in open ocean waters and three in the brackish Matawan Creek—sent shockwaves across the nation. The mysterious predator, nicknamed the Matawan Man-Eater, spurred fear and fascination that still spark debates that endure to this day. While some theories attribute the shark attacks to a single predator, others theorize that multiple sharks may have been involved. As we revisit this incident, we explore the most compelling theories to uncover what might have drawn marine predators into such an unusual setting. Environmental Changes: A Disruptive Summer The summer of 1916 brought record-breaking heat to the American East Coast, with high temperatures transforming the aquatic environment. Such environmental transformations are known to destabilize ecosystems, thus triggering changes between predator and prey dynamics. Unusually warm waters near the coastline may have reduced the abundance of prey, leaving larger predators like sharks to travel farther in search of food. Matawan Creek, with its brackish waters and slower currents, could have appeared as a promising hunting ground. Moreover, experts today understand that temperature fluctuations can disorient marine species, causing them to venture into unfamiliar territories. While scientific data specific to the summer of 1916 is sparse, the idea of sharks responding to environmental stress remains plausible and has been observed in other shark-human encounters. Bull Sharks: The Freshwater Specialists Bull sharks are often considered possible suspects in the Matawan attacks because of their unique ability to thrive in both saltwater and freshwater. This is possible thanks to a special process in a bull shark’s physiology called osmoregulation, which helps them maintain the right balance of salt and water in their bodies. 
A key part of this involves their rectal gland, which removes excess salt when they’re in the ocean, and their kidneys, which help conserve salt and water when they move into freshwater. Their gills also play a role by helping them adjust to the changing environment. Bull sharks have even been known to swim far up rivers around the world, making it easy to see why they’re strong contenders for the Matawan attacks. Adding to their notoriety is their bold hunting behavior and adaptability. Bull sharks have been observed preying on anything from fish to birds to dolphins, and even cannibalizing each other, and have been recorded attacking humans in the ocean. Their aggression in unfamiliar environments aligns with the incidents of 1916. While physical evidence (such as tooth fragments) was never definitively linked to a bull shark, their biological traits and behavioral patterns make them a leading culprit. Rogue Great White Shark: Fact or Fiction? The great white shark occupies a special place in this mystery, thanks to cultural narratives that have shaped its image as a terrifying apex predator. Following the attacks, a great white was captured off the coast of New Jersey and human remains were reportedly found in its stomach. This discovery led to widespread belief that this single shark was responsible for the Matawan attacks. However, modern science challenges the “rogue predator” theory. Great whites typically prefer open ocean habitats and are rarely found in estuarine environments like Matawan Creek. Their presence so far inland is considered highly unusual, casting doubt on whether the great white alone was behind the tragedy. Still, the 1916 events contributed significantly to the mythology surrounding this species, eventually inspiring Peter Benchley’s Jaws in 1974—a novel turned into a cinematic phenomenon that forever changed how society views sharks. Could There Be Multiple Sharks? 
While much of the focus has been on a single predator, some researchers suggest that the attacks might not have been the work of one shark at all. Instead, the geographical spread and timing of the incidents raise the possibility of multiple predators. This theory assumes that environmental stress—whether due to temperature, prey scarcity or other factors—brought several sharks into closer contact with human activity, resulting in the tragic encounters. By acknowledging the potential for multiple culprits, this perspective broadens the ecological narrative, pointing to how varied factors in marine ecosystems can converge to create such rare events. Wartime Speculative Theories The cultural context of 1916 gives rise to some of the more speculative explanations for the attacks. During World War I, fears of German U-boats patrolling the Atlantic were rampant. Some researchers believe that these submarines could have disturbed marine habitats, driving sharks closer to shore. While there is no scientific evidence to support this idea, it reflects the global tensions of the era and humanity’s tendency to attribute mysterious phenomena to broader crises. Other theories, ranging from migratory anomalies to behavioral quirks, have occasionally surfaced, but they remain outside the realm of scientific validation. The Enduring Legacy of the Matawan Shark Attacks The Matawan Man-Eater incident was transformative as it left a significant mark on humanity’s relationship with sharks. Before these attacks, sharks were considered little more than curious marine animals that cleaned up the seas. However, the events of 1916 reframed them as dangerous predators that brought widespread fear. This perception was later amplified by cultural milestones like Jaws and, more recently, Shark Week—an annual celebration of shark awareness that captivates audiences worldwide. 
In fact, Shark Week pays special homage to the Matawan events, reflecting on their lasting impact on shark research and conservation efforts. In truth, the Matawan Man-Eater incident stands out as a rare and highly unusual event in the history of human-shark interactions. Sharks are often misunderstood and unfairly labeled as mindless predators, yet the reality is quite different. The vast majority of shark species avoid humans and attacks are exceedingly rare. Most of the known attack encounters occur because sharks mistake humans for their natural prey (such as sea lions) or are simply curious—an unfortunate consequence of their environment and instincts, rather than deliberate aggression. The events of Matawan Creek in 1916 were an anomaly, likely driven by unique environmental factors

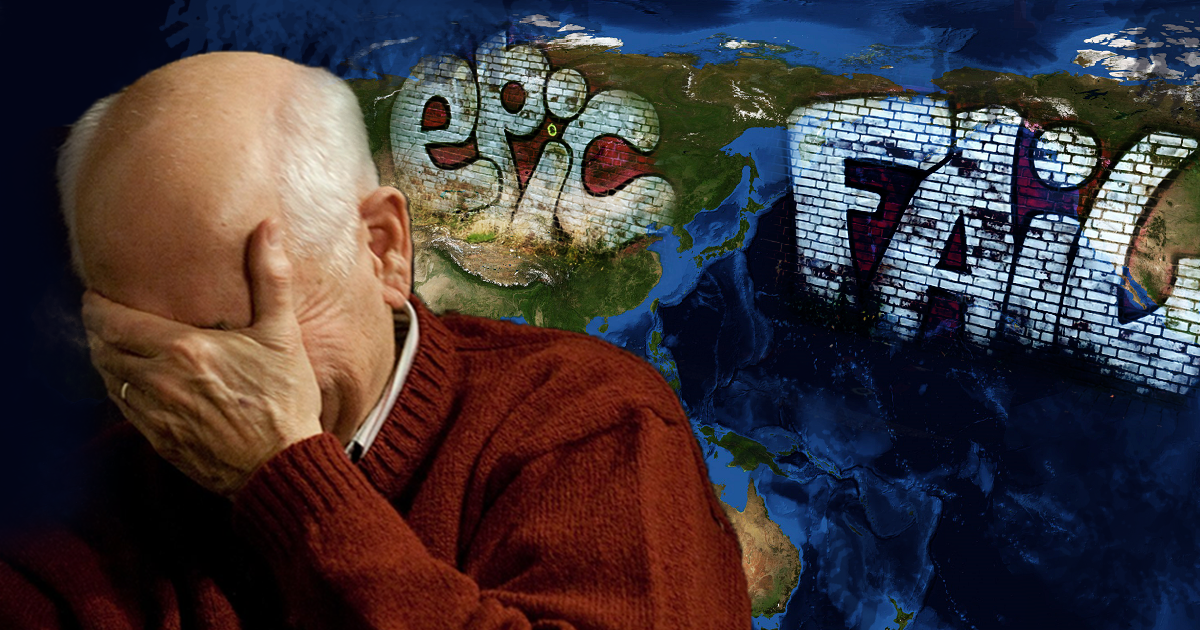

History’s Largest Political Alliances With ‘EPIC FAIL’ Moments

World history has witnessed ambitious political alliances take form to reshape geopolitics and foster cooperation. Some were born out of war, others from economic strategy while a few gathered together under ideological alignment. However, not all of history’s political alliances made it past the drawing board—or if they did, they fractured before their full potential could even unfold. These unfulfilled pacts offer tantalizing “what-ifs,” teasing us with glimpses of alternate realities where global power might have shifted; where wars may have been avoided; where history could’ve been written anew. Below are the superpowers that could’ve existed, but rather turned out to be moments of—as people these days would say—Epic Fail. The League of Nations: A Vision of World Peace That Cracked Early Established in 1920, the League of Nations was conceived as the world’s first permanent international organization dedicated to peace. The League was championed by US President Woodrow Wilson in the aftermath of World War I, with the aim to resolve disputes through diplomacy rather than warfare. In an ironic turn of events, however, Wilson’s own country never joined. The US Senate, wary of entangling alliances and concerns over national sovereignty, rejected the Treaty of Versailles—and with it, the League. Without the United States’ participation and lacking a standing military force, the League was largely ineffective. It failed to stop Japanese aggression in Manchuria (1931), Italy’s invasion of Ethiopia (1935), and Germany’s remilitarization of the Rhineland (1936). Had the USA ratified the treaty and joined the League, its presence might have added both authority and deterrence, potentially reshaping the fragile interwar order and delaying—or even preventing—World War II. Despite its epic fail, the League’s dissolution in 1946 paved the way for the United Nations, which sought to improve upon the League’s shortcomings with stronger mechanisms and broader participation. 
US-France Relations in the Cold War: Ambitions and Strains The United States and France share a historic bond, forged during the American Revolutionary War through agreements like the 1778 Treaty of Amity and Commerce. During the Cold War, as Soviet influence grew and nuclear risks loomed, both nations sought to strengthen economic and defense ties within NATO to counter the Eastern bloc. While no single formal treaty akin to the 1778 agreement was proposed, discussions for closer bilateral and multilateral cooperation persisted in the 1950s and 1960s to bolster Western unity. Tensions, however, emerged under French President Charles de Gaulle, who resisted US dominance in NATO and prioritized French sovereignty. Then in 1966, France withdrew from NATO’s integrated military command and developed an independent nuclear arsenal, straining the alliance. These moves limited the potential for deeper Franco-American collaboration. While a stronger partnership might’ve enhanced Western cohesion, broader factors—such as de Gaulle’s nationalism, economic challenges and diverging strategic priorities—complicated unity. Despite these strains, US-France cooperation endured in areas like intelligence and trade, shaping the Western bloc’s resilience throughout the Cold War. Not a complete epic fail. The Warsaw Pact’s Roots: Mistrust and Fears As the Iron Curtain divided Europe in the late 1940s, fleeting proposals for a Pan-European security framework briefly raised hopes of uniting East and West. Soviet leaders, including Foreign Minister Vyacheslav Molotov, suggested a collective security arrangement involving both the USSR and Western nations. Western powers, however, viewed these overtures as an epic fail of sincerity, suspecting they were ploys to delay NATO’s formation and weaken Western integration through efforts like the Marshall Plan. Mutual distrust ultimately prevailed, dashing prospects for a unified alliance. 
The West, skeptical of Soviet motives, forged ahead with NATO in 1949, solidifying the Cold War’s divide. The Soviet Union, perceiving NATO as a threat, responded by establishing the Warsaw Pact in 1955, consolidating Eastern Bloc nations after West Germany’s NATO membership. While a broader security pact might’ve softened the era’s ideological clashes, deep differences in ideology, economics, and geopolitics made such an outcome unlikely. Still, these early discussions showed that the path to division was not a complete failure, as both sides sought stability, albeit through rival blocs. SEATO’s Epic Fail: A Treaty With Teeth Missing The Southeast Asia Treaty Organization (SEATO), established in 1954 through the Manila Pact, aimed to halt communist expansion in Southeast Asia, drawing inspiration from NATO. Its members—the United States, United Kingdom, France, Australia, New Zealand, Pakistan, the Philippines and Thailand—formed a coalition to counter Soviet and Chinese influence. Yet, with only the Philippines and Thailand as regional members, and major powers like Indonesia, India, and Vietnam absent due to non-alignment or conflict, SEATO struggled to gain local legitimacy. Unlike NATO, SEATO’s charter lacked mandatory military commitments, requiring unanimous consent for action, which critics saw as an epic fail in design. This weakness was glaring during the Vietnam War, when SEATO failed to deliver a unified response, as members like France and the UK hesitated to engage fully. By 1977, shifting regional priorities led to SEATO’s dissolution. A stronger treaty with binding obligations and broader regional support might have bolstered its role as a counterweight to communism, but distrust of Western-led alliances and the non-aligned movement posed steep hurdles. 
The Trans-Pacific Partnership (TPP): A Modern Missed Opportunity The Trans-Pacific Partnership (TPP), negotiated among 12 Pacific Rim nations—including Japan, Australia, Vietnam and the United States—emerged as a bold 21st-century trade agreement. Signed in 2016, it sought to slash trade barriers, establish robust labor and environmental standards, and counter China’s growing economic clout in Asia. The TPP was hailed as the vibrant heart of US leadership in the region, but critics in the US saw it as an epic fail for American workers; fearing job losses and eroded sovereignty. In 2017, the Trump Administration withdrew the USA from the TPP, prioritizing domestic concerns over multilateral commitments. The remaining nations forged ahead with the Comprehensive and Progressive Agreement for Trans-Pacific Partnership (CPTPP), which took effect in 2018 but lacked the economic heft of the US economy. A fully ratified TPP with American participation might have reshaped global trade and bolstered American influence in the Asia-Pacific, though opposition within member states and

The 14th Century Pandemic That Made Today’s English Language

The Black Death of the 14th century remains one of history’s most devastating pandemics. This dark period claimed millions of lives and upended societal norms. Beyond its immediate impacts, this pandemic catalyzed long-term changes to European languages—particularly English—illustrating humanity’s ability to adapt its linguistic structures in times of crisis. This article explores the language transformations spurred by the Black Death, highlighting its role in elevating vernacular usage, enriching lexicons and reshaping socio-linguistic dynamics, as well as its parallels with the cultural landscape of the Covid-19 pandemic. The Societal Disruption Caused by the Black Death Pandemic Emerging in 1346 and persisting into the early 1350s, the Black Death killed up to half of Europe’s population. This catastrophic loss plunged societies into utter chaos, disrupting agriculture, urban trade and feudal hierarchies. Before the plague, linguistic landscapes were defined by rigid social structures: Latin dominated scholarly and ecclesiastical discourse, Norman French reigned among England’s elite, and regional dialects served the common folk. The societal upheaval of the plague disrupted these norms, paving the way for vernacular languages to rise as instruments of necessity and inclusion. The Rise of Vernacular English: Language Evolution Through Crisis In pre-plague England, Norman French dominated the aristocracy, while Anglo-Saxon dialects lingered among the peasantry. As the plague caused widespread death among clergy and nobility, English began filling the void left by the French-speaking elites. This period saw the ascent of Middle English, characterized by simplified grammar and an enriched lexicon. Literary works such as Geoffrey Chaucer’s The Canterbury Tales demonstrated the language’s growing accessibility and versatility. 
Although many medical terms like “pestilence” became widely associated with the era, other terminology, such as “quarantine,” gained prominence during later plagues. Across Europe, similar linguistic shifts were evident. Dante Alighieri’s use of Italian in The Divine Comedy predated the Black Death but symbolized the increasing embrace of vernacular literature. The pandemic accelerated this trend, as societal shifts eroded Latin’s exclusivity and elevated local languages for communication and documentation. Emergence of the Middle Class & Foundation for Modern English The dramatic reduction in population forced landowners to negotiate with a smaller workforce, empowering common folk and elevating their vernaculars. Although this contributed to broader linguistic evolution, attributing the rise of a linguistic middle class exclusively to the Black Death oversimplifies a complex historical phenomenon. The socio-linguistic upheavals triggered by the Black Death set the stage for Early Modern English, with richer vocabularies emerging alongside more standardized grammar structures. The invention of the printing press in the 15th century harnessed these shifts, further democratizing and consolidating language changes initiated during the pandemic. The Black Death’s linguistic legacy underscores how disasters can dismantle entrenched systems and foster innovation. From the rise of Middle English to the societal shifts influencing European tongues, the pandemic’s impact reveals humanity’s resilience in times of adversity. While distinct from Covid-19’s digital vernacular revolution, both pandemics demonstrate language’s enduring adaptability as a tool for connection and shared experience. Parallels of the Black Death & Covid-19 The Black Death and the Covid-19 pandemic, though separated by centuries, reveal striking parallels in their capacity to reshape the English language’s ever-evolving structure. 
During the Black Death, vernacular languages gained prominence as Latin’s dominance waned, driven by societal shifts and the reduced influence of ecclesiastical elites. Similarly, the Covid-19 era has seen the proliferation of digital vernaculars. Memes, emojis and abbreviated expressions have transcended linguistic boundaries, becoming universal tools of communication in a hyperconnected world. Much as medieval plagues democratized language by bringing colloquial speech to the forefront, Covid-19 has fostered new forms of accessibility and inclusivity in global discourse. Both eras also illustrate language’s adaptability in times of crisis. The Black Death introduced terms reflecting its grim reality, just as Covid-19 has enriched modern lexicons with words like “social distancing,” “lockdown,” “vaxxed,” “essential businesses,” and “Zoom fatigue.” In both cases, languages evolved to encapsulate shared experiences, serving as a testament to humanity’s resilience and ingenuity. These linguistic transformations, born from adversity, remind us that even in the shadow of great challenges, language continues to do its magic. After all, the magic of language is to unite people, adapt to the times, and keep thriving toward a hopeful future. Header Image: A part of the painting “De Triomf van de Dood” (or “The Triumph of Death”) by Flemish Renaissance artist Pieter Bruegel the Elder. Circa 1562. Source: Public Domain.

Key American Revolution Figures Who Reshaped the Modern World

Happy Independence Day, everyone. The American Revolution wasn’t just a game-changing moment in US history. It was furthermore a catalyst for the modern world stage’s transformation. The key figures who emerged from this time period didn’t just fight for independence—they also shaped the future of governance, diplomacy, human rights and even innovative progress across the world as a whole. Let’s explore the lives and legacies of these revolutionary legends as we discover how their contributions resonate in present-day societies. George Washington: The Reluctant General Turned Global Icon Imagine a man who didn’t seek power but was thrust into the role of commander-in-chief by the sheer force of his personality. George Washington’s impressive leadership in the Continental Army was vital in achieving American independence. As the first President of the United States, he set a powerful example by voluntarily stepping down after two terms, demonstrating that the transition of power could be done in a peaceful manner. This bold move not only defined the future of American democracy but also inspired other leaders like Francisco de Miranda and Simón Bolívar, who drew from Washington’s example in their struggles for freedom and democratic reform in Latin America to become free from the Spanish Empire. While Washington’s legacy is marked by complexities—his role as a slave owner and his initial resistance to ending slavery reflect the contradictions of his era—his contributions to democracy and global governance remain significant. His leadership and principles continue to influence modern societies, serving as a reminder of the enduring quest for freedom and justice. Thomas Jefferson: The Pen That Sparked Revolutions Thomas Jefferson’s eloquence in the Declaration of Independence captured the Enlightenment ideals of liberty, equality and self-governance. These words were not just a call to arms for the colonies—they became a manifesto for oppressed peoples worldwide. 
Though Jefferson was in France during the Constitutional Convention, his vision of a government by the people and for the people influenced the drafting of the Constitution, which fueled democratic movements on European and Latin American soil. Yet, Jefferson’s legacy is marked by a haunting contradiction: while he advocated for revolutionary principles, he also owned slaves, embodying the moral complexities of his time. This duality reflects the broader historical struggle between his pioneering contributions to democracy and the ongoing challenge of addressing the injustices of his era. Despite these contradictions, Jefferson’s ideals of freedom and self-governance continue to inspire and fuel democratic aspirations today, reminding us of the enduring power of visionary ideals and the shared journey toward justice and equality. Benjamin Franklin: The Polymath Who Piloted Progress With his wit and formidable intellect, Benjamin Franklin was a masterful statesman. His diplomatic efforts in France were crucial in securing the military alliance that contributed to winning the American Revolutionary War. Franklin also played a key role at the Constitutional Convention, aiding in the creation of the US Constitution, which laid the foundations for modern governance. Although he owned slaves in his early life, Franklin’s views changed dramatically as he matured. In his later years, Franklin denounced slavery and became a vehement advocate for abolition. His commitment led him to call for the end of the slave trade, serve as president of the Pennsylvania Abolition Society, and work towards the gradual abolition of slavery, thereby advancing the cause of emancipation and racial equality. Franklin was also a master inventor whose innovations, such as the lightning rod, improved living conditions and saved lives. 
His scientific achievements and intellectual pursuits fostered a spirit of inquiry and curiosity that shaped American innovation, one that continues to inspire progress worldwide. John Adams: The Architect of Independence John Adams was a passionate advocate of liberty who played a major role in igniting the flames of revolution and in crafting the Declaration of Independence. As a master diplomat, Adams secured essential loans and alliances—especially with the Dutch—that sustained the fledgling United States during its struggle. His innovative political theories and dedication to the rule of law deeply influenced the drafting of the US Constitution, embedding principles of separation of powers and checks and balances that became a global model. Adams’ vision extended beyond America’s borders, inspiring democratic frameworks in countries as diverse as post-revolutionary France and the newly independent Latin American states. His progressive stance on slavery, advocating for gradual abolition, highlighted his commitment to justice and equality. John Adams’ legacy is not only a cornerstone of American democracy but one that influences global governance on a profound level, demonstrating the enduring power of principled leadership and visionary ideas. Alexander Hamilton: The Financial Visionary Alexander Hamilton laid the bedrock for America’s economic system. He championed the national bank and introduced financial policies that stabilized the fledgling nation under its new Constitution. His vision for a strong central bank and sound public credit didn’t just anchor the American economy. It also set a precedent that would influence financial systems around the world. Nations looking to modernize their economies drew from Hamilton’s innovative ideas, which have helped shape global financial stability. However, Hamilton’s story is multifaceted. 
His fierce political rivalries, notably with Thomas Jefferson, and his tragic duel with Aaron Burr, mark the chaotic peaks of his career. While his contributions to economic policy are celebrated, his limited efforts toward abolition highlight the complexities of his legacy. His legacy, however, still endures as it shows how one man’s bold ideas can transform national economies and international finance on a massive scale. Marquis de Lafayette: The French Connection The Marquis de Lafayette’s dedication to the American Revolution and his role in the French Revolution highlight the deep connections between revolutionary ideals across the Atlantic. Although he did not directly contribute to drafting the US Constitution, Lafayette’s unwavering commitment to liberty and democratic governance was shaped by his American experiences on a personal level. His strong advocacy for democratic principles fueled the French Revolution, which in turn sparked a broader wave of reform and independence movements across Europe and Latin America. However, Lafayette’s

How One War Rewrote the English Language

On the crisp autumn day of October 14, 1066, a pivotal battle unfolded in southern England, one that forever altered the course of English history and of the English language. Picture this scene: William the Conqueror, the audacious Duke of Normandy, locking horns with Harold Godwinson, the last Anglo-Saxon king. Their conflict, known as the Battle of Hastings, was about who could claim the throne of England and have dominion over its people. Both men—certain of their divine right to rule—clashed with unyielding conviction. The outcome? A decisive Norman victory after Harold was killed, likely by an arrow to the eye or a fatal blow from Norman knights. This event toppled the Anglo-Saxon reign and set the stage for a linguistic revolution. Just who were the Normans, and how did their culture reforge the English language as we know it? Raiders to Royals to Shapers of English See, the Normans weren’t just French. They were also descendants of Vikings who had once ravaged France. After much political maneuvering from the French side and the securing of allegiances, these Vikings settled on French soil for good. They then adopted French customs while also converting from Norse Paganism to Christianity. This blend gave rise to the Normans—a fascinating mix of Norse Viking heritage and French culture. The Norman tongue was a dialect of Old French, enriched by both Old Norse and Latin influences. This was William’s mother tongue, which he brought to England with lasting impact. After William’s victory, Norman French became the official language of the government and elite, while Latin remained the language of the church and scholars. However, Old English did not vanish overnight. It lived on in the speech of the common folk while gradually evolving. Over time, the continuous blending of languages gave rise to Middle English. 
Norman French, along with its Latin roots, introduced a wealth of vocabulary, particularly in areas such as governance, art, literature, and cuisine. 
Words such as “court,” “judge,” “marriage,” “government,” “dinner,” “pork,” and “beef” became part of everyday language. Meanwhile, Old Norse had already flavored Old English with gems such as “sky,” “law,” “cake,” “egg,” “knife,” and “husband.” The Past and Future of the English Language The aftermath of the Battle of Hastings created a rich linguistic tapestry, blending Anglo-Saxon roots with Viking vigor, French flair, and Latin influences. This vibrant mix laid the groundwork for Modern English, transforming it into the eclectic language we enjoy today. Just as Old English evolved from a blend of different tongues, Modern English continues to evolve, incorporating words and expressions from a variety of cultures. This ongoing evolution gives rise to unique dialects and sounds, from the hip phrases of urban slang to the hybrid languages of Pidgin. The Battle of Hastings wasn’t just a historic clash of swords; it was a monumental cultural showdown that sparked a revolution in language. This epic fusion of voices and civilizations created a dynamic, ever-changing mosaic of English that reflects the diverse influences shaping our world today. Whether through memes, trending hashtags, or the latest linguistic trends, English remains a living testament to our global and cultural interconnectedness. For the newer generations, this rich history adds depth to the everyday words and expressions we use, celebrating a language that’s as manifold and evolving as we are. Top Image: Painting of “The Battle of Hastings in 1066” by François-Hippolyte Debon, Musée des Beaux-Arts, Caen (France). Source: Public Domain.

This Place Near Iran Affects World Oil Prices – From History to Consequences

There’s a narrow but vital stretch of water near Iran that has witnessed millennia of world maritime trade, diplomatic gambits and geopolitical rivalries. Stretching between the Persian Gulf and the Gulf of Oman, this waterway connects major oil-exporting nations to world markets, and what happens here influences oil prices big time. This waterway is the Strait of Hormuz, widely regarded as the planet’s most important choke point for crude oil and liquefied natural gas. More info on current events later, but first, let’s take a brief trip back to ancient history to start things off. A Strait of Ancient Empires The Strait of Hormuz’s history is just as ancient as the civilizations that bordered it. From the seafaring empires of Mesopotamia to the strategic maneuvers during the Age of Sail, the strait’s significance has evolved side by side with world trade dynamics. The Strait of Hormuz first gained prominence in the ancient world as a key route for spice and silk trade, connecting East Asia to Continental Europe. Persian empires, like that of Cyrus the Great, recognized the waterway’s strategic value and fortified its surrounding areas. Fast forward to the 20th century, and the strait emerged as a focal point following the discovery of oil in the Gulf region. This newfound resource transformed its surrounding nations into global energy giants. As a result, the shipping lanes brimming with crude oil tankers became a lifeline for economies worldwide. The “Tanker Wars” of the Iran-Iraq Conflict (1980–1988) The Iran-Iraq War brought unprecedented attention to the Strait of Hormuz. Attacks on oil tankers and merchant vessels—known as the “Tanker Wars”—became a grim reality as both nations sought to cripple each other’s oil-dependent economies. 
Despite the targeted strikes, the strait never saw a full closure, thanks to international naval interventions. Politicians like US President Ronald Reagan championed Operation Earnest Will to escort vessels, highlighting the strait’s global importance. Meanwhile, Iranian leaders like Ayatollah Khomeini viewed the waterway as a bargaining chip in their regional aspirations. Threats, Tensions & Oil Price Hikes in the Early 21st Century The early 21st century saw a resurgence of threats to close the Strait of Hormuz, often as a reaction to American-imposed sanctions on Iran. In 2012, during heightened tensions over Iran’s nuclear program, President Mahmoud Ahmadinejad hinted at leveraging the strait as a countermeasure against Western pressures. While these threats caused temporary spikes in oil prices, military coalitions ensured the continuation of maritime traffic. The Strait Today In 2025: On the Brink of Escalation The strait at present is a flashpoint of regional and international rivalries. In June 2025, tensions soared after US President Donald J. Trump ordered airstrikes on Iran’s nuclear facilities. Iran’s parliament responded by approving a motion to close the Strait of Hormuz—though it awaits final approval from the Supreme National Security Council and Supreme Leader Ayatollah Khamenei himself. International leaders, including the British Prime Minister, the Saudi Crown Prince, Russian President Vladimir Putin and China’s President Xi Jinping, have urged de-escalation and warned about serious consequences should the closure take place. US Vice President JD Vance has also weighed in, saying that closing the Strait of Hormuz would be self-destructive for Iran’s own economy. Meanwhile, the UN Secretary-General has called for a diplomatic resolution to avoid such world-changing repercussions. 
The Consequences of the Strait’s Closure If Iran were to block the Strait of Hormuz, the consequences would spread like seismic ripples across a vast ocean, touching every corner of the world. A Story of Unresolved Tensions One could see the Strait of Hormuz as the very heartbeat of global energy—its every pulse sending waves across the planet. This strait remains a pivotal stage for age-old rivalries and present-day power struggles. Meanwhile, its history serves as a testament to the delicate balance between cooperation and conflict, and between trade and turmoil, on which numerous nations and even livelihoods depend. As the world watches the current escalation, leaders must learn from history’s lessons—ensuring that this lifeline of global trade remains open and secure for generations to come. Header Image: Aerial view of the Strait of Hormuz – taken near Iran with its oil-shipping route. By NASA. Source: Public Domain.

Not Only Trump: History of President F-Bombs & Profanity – From Lincoln to Biden

WARNING: This article contains strong vulgarity and unbiased facts. We strongly advise you either to turn away if you’re sensitive or to enjoy it with amusement if you don’t mind. For those who don’t mind, we bid you welcome. Please sit back, relax and keep reading:

On June 24, 2025, as Donald J. Trump, current President of the USA, prepared to board Marine One, he paused before a crowd of excited reporters. And thus he spoke: “We basically have two countries that have been fighting so long and so hard that they don’t know what the f*ck they’re doing.” You heard him, alright… This unexpected profanity burst through the usual political polish and went viral across social media in an instant. Some people condemned Trump’s profanity as unbecoming of the Oval Office, while many others hailed his attitude as refreshing and honest. Either way, Trump’s candid use of the F-Bomb raised a question: Has a president ever sworn (the vulgar kind) in public before, and was Trump truly the first?

A Playful Take: Why the F-Bomb Feels So Impactful

There’s something about an unscripted curse that grabs our immediate attention. Imagine Lincoln delivering the Gettysburg Address… and then blurting “sh*t” on “the fields of… uh, you know.” That kind of incongruity jolts us out of reverence and into raw, uncensored humanity. Trump’s F-Bomb carries that very same jolt. It feels like overhearing an office boss gripe about a broken computer rather than hearing a scripted statement on defense policy. When leaders drop the veil of formal diction, we glimpse their unvarnished selves: raw emotions, frustration, and all their true colors. By leaning on a four-letter word, presidents risk criticism for crude language, but they also court a sense of authenticity. In the current age of highly controlled media and polished mannerisms, a live slip-up becomes a magnetic moment that spreads faster than any talking point.
Trump’s F-Word in Context

For clarity’s sake, Trump’s remark quoted earlier was about the extended conflict between Israel and Iran. To be even more specific, Trump was rather pissed off at the Israeli government at the time for provoking further conflict with Iran right after a ceasefire had already been agreed. He thrust the “f*ck” into an unscripted sound bite, saying: “We basically have two countries that have been fighting so long and so hard that they don’t know what the f*ck they’re doing.” The incident highlighted several trends:

Yet even this ostentatious moment wasn’t unprecedented. American presidents have long peppered speeches, interviews and private conversations with imperious oaths and salty expletives, revealing that blunt language and high office have coexisted since the republic’s early days.

Profanity & Presidents: A Brief Overview

Before diving into individual examples, it helps to understand why US presidents curse at all. Profanity can:

Across eras, presidents have balanced the risk of offending decorum against the communicative power of a well-thrown F-Bomb. Here are some notable examples of presidents other than Trump who have used profanity before, during, and after their time in office.

The 19th Century: Lincoln’s Fiery D-Word Profanity

Abraham Lincoln was renowned for his sharp tongue and coarse sense of humor. Nonetheless, he made an effort to avoid curse words in public and in formal speeches, and he was known not to tolerate profanity during meetings. However, anecdotes show that Lincoln would react with outbursts of furious profanity when under severe stress. He would label political opponents “damned rascals” and would use the word “damn” with great fury when confronted by conflict or when his patience was tested. His passionate use of the word “damn” would even make Lincoln himself feel uneasy once he regained his calm.
After excoriating a politician with the d-word, for instance, he once stated with personal shock, “God knows I do not know when I have sworn before.”

Mid-Century Tirades: Truman and LBJ

Harry S. Truman: “Dumb Son of a Bitch”

In April 1951, President Harry S. Truman dismissed General Douglas MacArthur after MacArthur openly challenged White House policy on the Korean War. In interviews later published in the American writer Merle Miller’s 1973 biography Plain Speaking: An Oral Biography of Harry S. Truman, the president deadpanned: “I fired him because he wouldn’t respect the authority of the President. I didn’t fire him because he was a dumb son of a bitch, although he was, but that’s not against the law for generals. If it was, half to three-quarters of them would be in jail.” That barb perfectly captures Truman’s blunt, no-bullsh*t style, yet it also reveals the toll of global crises on a president who preferred plain-spoken candor to diplomatic euphemisms.

Lyndon B. Johnson: From “Piss” to Peril

In early April 1965, shortly after Canadian Prime Minister Lester B. Pearson publicly denounced the US bombing of North Vietnam at a Philadelphia lecture, President Johnson invited him to Camp David for a private day-long meeting. According to multiple firsthand recollections, LBJ greeted Pearson with unfiltered fury, shoving him back against a porch railing, gripping his lapels, and snapping, “Don’t come into my living room and piss on my rug!” Though no official record of that exchange survives, the accounts agree that Johnson’s outburst reflected his intense pride in US policy. When they emerged the next morning for their joint press briefing, Pearson offered only measured remarks and, mindful of diplomatic decorum, refrained from repeating his earlier criticism. LBJ and the FBI Director at the time, J. Edgar Hoover, often clashed over the Bureau’s reach and Hoover’s secretive style.
Still, Johnson decided it was wiser to retain Hoover than to risk him turning against the administration, hence his oft-quoted line, “Better to have him inside the tent pissing out than outside the tent pissing in.” This blunt metaphor perfectly captures the wary calculation that defined their uneasy alliance. Johnson used profanity to intimidate aides and foreign leaders alike. His swearing blended political theater with blunt negotiation tactics, underscoring his belief that colorful language could convey meaning faster than measured prose.

Watergate